Science writing update, April 2023 edition

Social media and mental health; ice baths; hungry judges debunked; dodgy parenting advice; and do science and politics mix? Plus even more interesting links

Welcome to my monthly “in case you missed it” update, where I send you my recent science writing!

Below is just a selection: you can find all my recent articles for the i newspaper—on the scientific U-turn on peanut allergy, on the microbiome and mental illness, on whether neuroscience is relevant to criminal justice, and more—on the i’s website, at this link.

And further below is a selection of interesting science-related links that I didn’t write. It’ll hurt my feelings, but you can skip my stuff and go right to that list if you click here.

By the way, if you’re not already a subscriber to this Substack, you can join more than 8,000 others and put your name on the list right here to get monthly updates:

From a great Haidt

My biggest article of the past month (both in length and number of readers - who says attention spans are dropping?) was this one on social media and mental health. It was a reply to Jonathan Haidt and others who have argued that the jury is now in on the question of whether social media is a “major cause” of the teen mental health crisis.

I disagree. It’s still very much a possibility, but a lot of the studies that are often referenced in this area have much weaker results than I’d want to see before saying anything about a “major cause”. I go through them in the article. I also include some (hopefully useful) finger-wagging about things you shouldn’t do when discussing the data in this debate.

Cool your ardour

I was only vaguely aware of Andrew Huberman before last month. He’s a neuroscientist at Stanford, but mainly he’s known for being a YouTuber and “health influencer”. He put out a tweet at the start of March about the physical and psychological benefits of taking an ice bath and cold showers 🥶. I criticised it, and my tweet got loads of attention:

I was a bit off in that second bullet-point. I was implying that the results I mentioned (i.e. lots of p-values very close to the 0.05 cutoff) were part of the “uncanny p-mountain” phenomenon where authors, consciously or unconsciously, put their finger on the scale to push the results into “significance”. As I said in the tweet, that kind of pattern is prima facie (not at all definitive) evidence for p-hacking.

But actually, having looked at it in more detail, I think this study was subject to the other kind of p-hacking, where you run tons and tons of statistical tests and then just highlight the significant ones, without correcting for multiple comparisons. I explained this in the article I later wrote about the study.

It’s actually much worse than that: Nick Brown looked in even more detail at the study and found all sorts of problems with the data, not least that they published the full name and date of birth of all the participants in their publicly-available dataset. Oops (don’t worry, they’ve deleted that part now so I’m not making the situation even worse by broadcasting it).
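For anyone who wants to see why uncorrected multiple comparisons matter, here's a minimal sketch of the arithmetic. It rests on simplifying assumptions that are mine and not taken from the ice-bath paper: every test is independent, and every null hypothesis is true. The function names and the test counts are made up purely for illustration. Under those assumptions, running 20 tests at the usual 0.05 cutoff gives you roughly a 64% chance of at least one "significant" result by luck alone.

```python
# Illustrative arithmetic only: none of these numbers come from the ice-bath study.
# Assumes independent tests where every null hypothesis is true.


def familywise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across n_tests independent tests."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_threshold(n_tests: int, alpha: float = 0.05) -> float:
    """Per-test cutoff that keeps the familywise error rate at or below alpha."""
    return alpha / n_tests


if __name__ == "__main__":
    for n in (1, 5, 20, 50):
        rate = familywise_error_rate(n)
        cutoff = bonferroni_threshold(n)
        print(f"{n:>2} tests: P(at least one false positive) = {rate:.3f}, "
              f"Bonferroni cutoff = {cutoff:.4f}")
```

The Bonferroni correction shown here is just the simplest option, and a fairly conservative one; it's one of several ways to correct for multiple comparisons, not necessarily the one that would suit any particular study.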

This kicked off a whole discourse on Twitter about p-values where some bad-faith critics of the Open Science movement really embarrassed themselves with their daft arguments (for instance, one particularly weird and obsessive guy demanded to know why I was attacking this little-known paper when I hadn’t criticised Open Science luminaries like John Ioannidis… despite the fact I’ve criticised Ioannidis over and over and over and over again - not that this is at all relevant to the perfectly justified criticism of the low-quality ice-baths study anyway).

At any rate, I’ve become a bit fascinated with Huberman, who is fast approaching (or has already reached) online-guru status, and I suspect I’ll have more to say about him sometime soon…

Bad judgement

Another big one this month was “hungry judges” - the idea that hunger affects the way judges make decisions. This is one of the antediluvian (that is, pre-Replication Crisis) psychology findings that a lot of people know about, mainly through the book Thinking, Fast and Slow, but where the criticism doesn’t seem to have filtered through. Most people know that, say, power-posing has crumbled, but not so for hungry judges.

My article on this was occasioned by a new study that found the opposite result to the original 2011 PNAS study: in this one, the judges (Muslims who were fasting for Ramadan) made more lenient decisions rather than harsher ones.

I thought the results were quite ambiguous in the new study, and just to be clear I don’t think this is a “failed replication”, because so much of the new study is different from the first one. But I do think that the small, noisy effects in the new study should remind us that the apparently enormous effect of hunger in the original hungry-judges study was never plausible to begin with.

Tummy Wiseau

Last month it was bottle-sterilising; this month it’s “tummy time”. Yet another thing that parents are recommended to do by health authorities (like the NHS here in the UK and the National Institutes of Health in the US), but which there’s very little decent evidence for. I wrote about how this is probably harmless, but also if your baby hates it—and I have since heard many anecdotal accounts of exactly this from parents—you really don’t need to worry.

As a new parent who’s seeing all this stuff in real-time, I’m keen to look into other parenting recommendations - basically like a cut-price, male Emily Oster. Feel free to send me stuff (stuart.ritchie@inews.co.uk) you think might be worth investigating!

Science and politics and parapsychology

You might remember my piece “Science is political - and that’s a bad thing” from this Substack last year, and also my dialogue with myself on whether scientific journals should publish political content.

The recent debate over Nature’s endorsement of Joe Biden in 2020 makes me lean much more towards the position that journals should just keep out of politics altogether - I explained why in this article.

At the risk of sounding naïve, maybe one way to reduce the influence of politics on science is for people with opposing attitudes to work together on scientific projects. I was thinking about this when I read and wrote about a new replication attempt of the infamous Daryl Bem psychic-powers experiment - the thing that got me into this whole business of replication in the first place.

Sceptics and believers got together and designed an experiment that they all agreed would convince them, whichever way the results turned out. As I described in the piece (£), they went to extreme lengths to make the study ultra-transparent and impervious to bias, fraud, or anything else. It was a really remarkable study to read. Can you predict its result…?

If parapsychologists can do this for an experiment on psychic abilities, couldn’t we do it for lots of other contentious—even politically-contentious—questions? For example, to return to the very top of this newsletter, couldn’t it be possible for sceptics and proponents of the social-media-causes-psychopathology hypothesis to work on a bulletproof study that would really move the debate forward? What about other issues where there are clear “camps” on either side of a scientific debate?

Things I didn’t write but that you might like anyway

Some other recent links that you’ll probably enjoy:

You know that feeling when you click a link in an article which ostensibly backs up some claim or other, and you can’t find any reference to it in the link - or even find that the link says the opposite? Well, it happens in scientific papers too - a lot. A recent study examines citations in psychology papers, and finds that 9.3% of them are a bit wrong, and a further 9.5% completely misrepresent the research they’re apparently citing. Checking citations is another thing peer-reviewers would, in a perfect world, be doing, but of course they don’t have the time. Could flagging up dodgy citations be a future job for GPT?

Ian Hussey looked at the raw data in a Twitter thread and found that misleading citations ranged from 0 to 50%, and completely misleading ones from 0 to 30%. Who on Earth is reviewing the papers where a third of the citations are completely wrong?!

Scientists Behaving Badly update: not only was there this story in El País about the Spanish chemistry researcher who publishes a paper every 37 hours(!?) and who has now been “suspended without pay for 13 years”(!?), but the physicist Nicola Tomasetti has been tweeting some incredible examples of scientists self-citing to an absurd extent, clearly in an attempt to game the system and boost their h-index.

After criticism—and after several years—the authors of a 2017 article in Nature Human Behaviour that claimed suicidal thoughts and suicide attempts could be predicted extremely well from an fMRI brain scan have agreed to retract the paper. It was always an implausible result, and it turns out their machine-learning method dramatically overestimated its predictive ability.

Aside from anything else, fans of the UK version of The Office will do a double-take when they see the last author’s name.

Something I’ve often wondered: why is it that certain scientific fields get the “replication crisis” bug and others don’t? Obviously psychology and to a large extent neuroscience have been affected, as well as parts of economics and medical research. Another field that seems to have a disproportionate amount of self-critical stuff, much to its credit, is ecology & evolution. I guess just by luck a few papers appear, and then you have path-dependency effects where other people within the field start looking at the same thing. I was thinking about this while reading a new paper that looks at publication bias in ecology & evolution and concludes that many findings in that area “are likely to have low replicability”.

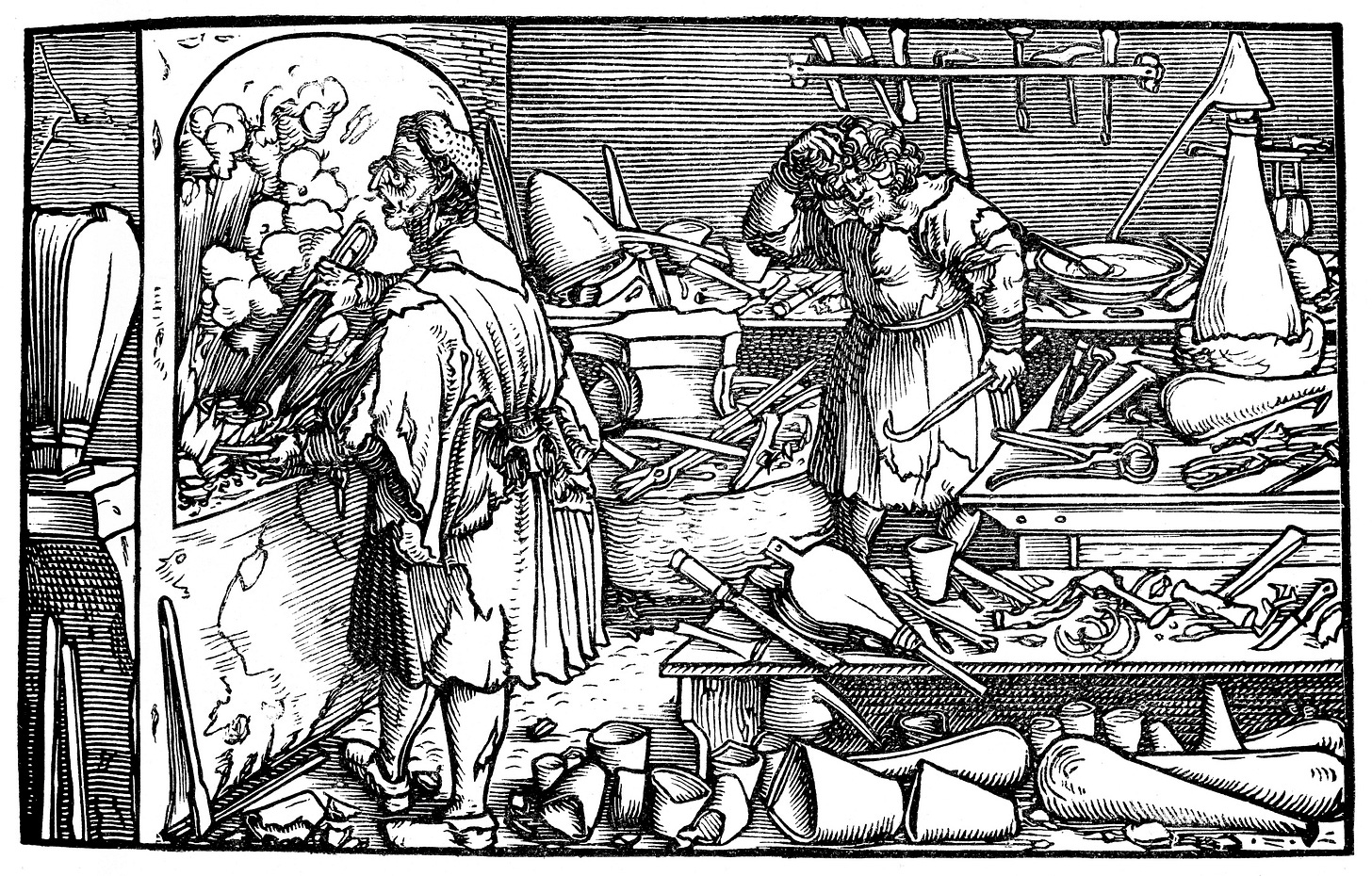

Do you know about Age of Invention by Anton Howes? It’s a Substack that’s overflowing with original, deeply-researched history, all related to progress and innovation in science and technology. Recently, Anton has been looking in detail at the career of James Watt, the Scottish inventor who massively improved the steam engine and helped drive the Industrial Revolution.

Relatedly, you might also notice that I’ve finally gotten around to using the “Recommendation” function to point you towards other Substacks that are always worth reading. The list—which I think will randomly cycle through the many Substacks I’ve picked each time you visit the page—is on the right-hand side of the homepage.

Image credit: Getty

The self citation stuff made me laugh out loud. Incredible chutzpah and editors out to lunch as usual.

The invalid citations thing is good to finally get some hard data on. I noticed that when reading COVID papers, but when I tried to tell people, nobody really believed me, it seems. The idea that prestigious scientists would routinely add fake citations to their work just seemed so far out of the Overton window of possibilities that it just makes you sound crazy. Yet I find it happening all the time in public health research. And yes, it's weird how some fields are more reflective than others. No surprise it's psychology that's investigating bad citations; it's weird, when the "crisis" kicked off my respect for psychology plummeted and I thought it must be one of the worst fields. In recent years my respect had been increasing again, because I learned that other fields have it even worse but don't even talk about any problems, let alone try to fix them. At least psychologists do a good impression of caring.

Dude you're accidentally making it look like what Kahneman did was no big deal. He makes his point with a super sketchy study, but no worries because 12 years later a better one will also show that "hungry judges tend to fall back on the easier default position." Argh?! OK, I promise to STFU about it now. I still love you.